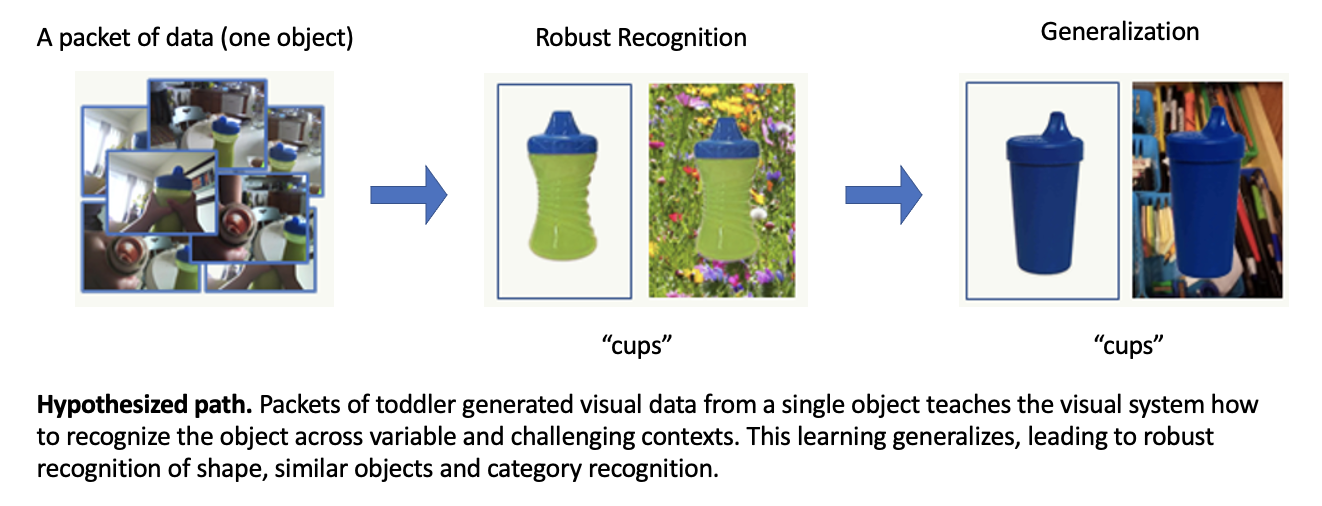

The crux of both human and machine learning is generalization: how can a learning system, biological or artificial, perform well not only on its training examples but also on novel examples and circumstances? One approach, widely used and well supported in both human and machine learning, is experience with many training examples. This solution avoids “overfitting” but is slow and incremental. In some cases of human learning, however, generalization requires minimal experience. Evidence of rapid learning from few examples, often called “one-shot” or “few-shot” learning, is particularly well documented for visual objects and for scientific and mathematical concepts. Incremental and one-shot learning have been discussed as distinct mechanisms, but there is growing interest in how one-shot learning might emerge out of prior incremental learning, an idea related to the broader concept of “learning to learn”.

The central idea explaining rapid learning from minimal examples is that deep representational principles allow the learner to represent novel examples in a way that supports appropriate generalization. Thus, most research on one-shot learning, both experimental and computational, focuses on the nature of these representations or on the learning machinery. But if one-shot learning is learnable, then an additional core question concerns the kinds of experiences that teach an incremental learner to become a one-shot learner. This is our focus. Our main idea is that generalization depends on knowing which transformations are allowable and which are not: for example, the transformations that relate different views of the same object, that preserve membership in a category, or that preserve equivalence (as in 3-1 and 1+1, which both denote 2). We seek to:

- characterize the transformations,

- characterize how they unfold in time,

- understand their active generation through behavior, and

- identify the underlying learning (and memory) mechanisms.